What is MCP (Model Context Protocol)? Clearly Explained!

MCP (Model Context Protocol) is a new standard that enables large language models to discover, understand, and call external tools autonomously—without plugins or brittle API glue code.

What Problem Does MCP Solve?

If you’ve ever tried getting an AI to do something practical—look up some data, send an email, run a workflow—you’ve probably run into a frustrating limitation: AI can’t actually use the tools we rely on every day. It can generate the right command, explain what to do, even imitate the tone of someone who knows what’s going on—but when it comes to actually doing things in the world, it’s stuck.

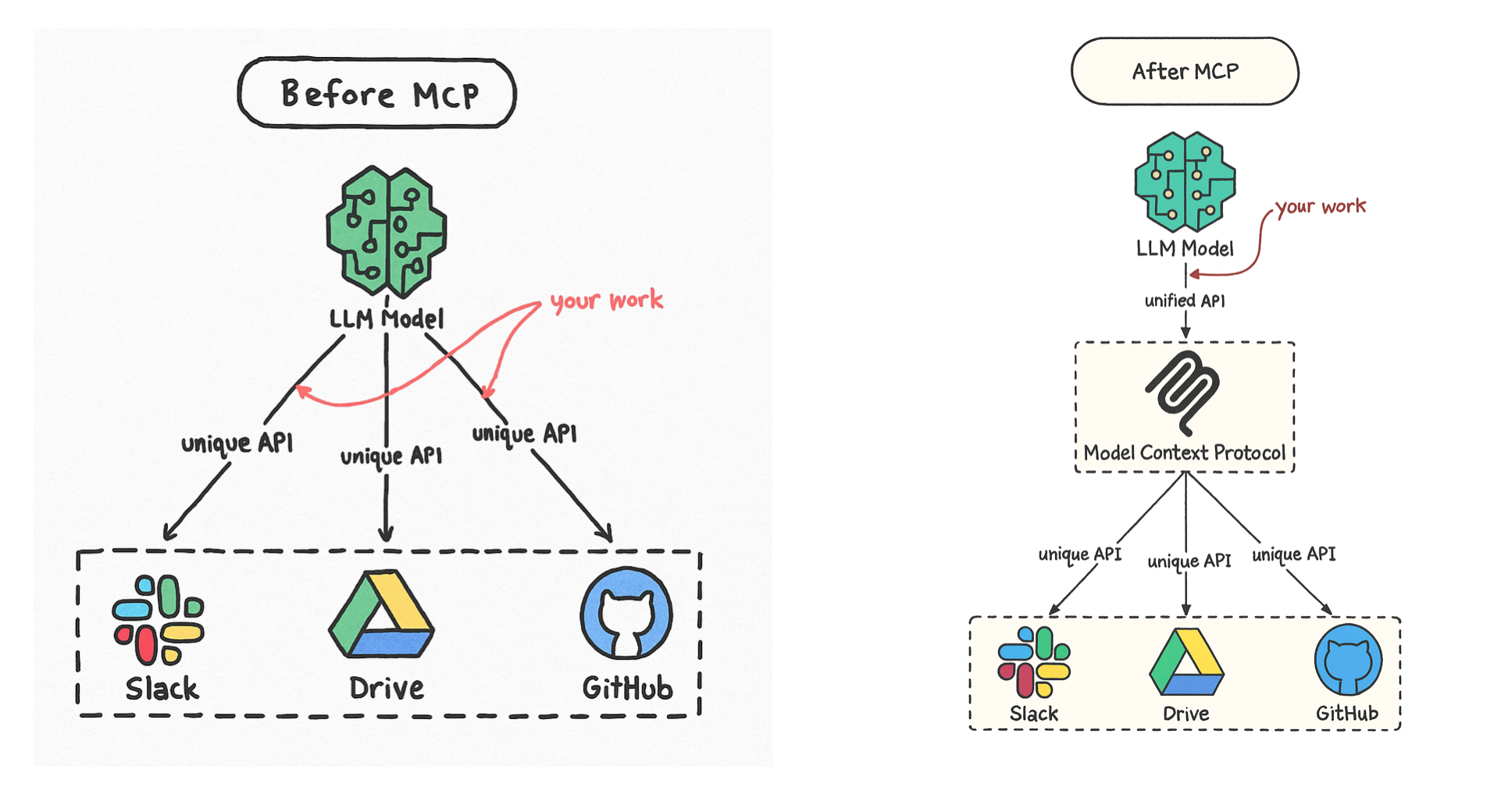

Before MCP, there were two common ways to get around this. One was the plugin model, as with ChatGPT plugins and custom GPTs. Every time you needed to connect to an external service, you had to manually build a plugin and attach it to a specific AI platform. If the platform changed or the API broke, the plugin failed. It was brittle, high-maintenance, and full of friction. Switching platforms or tools meant starting over, and developers spent more time wiring things together than solving actual problems.

The second was integrating APIs directly. That gave you more control—but also more headaches. Once your system touched more than a few services, it became a tangle of permissions, error handling, state management, and glue code. Every new tool added complexity, coupling, and risk. And crucially, neither approach gave the AI any real understanding of the tools it was supposedly using. The AI just sat on top, as a kind of language front-end. It could call functions, sure, but only because a human had hardcoded what those functions were and how they should be used. There was no autonomy. No planning. No composition.

That’s the problem MCP was built to solve.

MCP (Model Context Protocol) introduces a new kind of interaction: one where the model doesn’t just use tools—it understands them. It provides a shared protocol, a kind of machine-readable contract, that describes what tools exist, what they can do, and how they should be invoked. Instead of being spoon-fed specific API calls, the model reads the descriptions and figures out for itself how to proceed. It can decide when to use a tool, how to combine tools, and how to adjust its plan based on the environment.

This changes everything. With MCP, the model moves from passive execution to active orchestration. It can reason about its environment. It can plan. It can explore. It no longer depends on human developers to hold its hand through every step of the process. And that autonomy—the ability to make meaningful decisions about when and how to act—is what makes real tool use possible.

In short, MCP solves one of the most fundamental constraints of current AI systems: how to let large models use tools and external data on their own terms, without being boxed in by plugins or buried under layers of glue code.

What is MCP (Model Context Protocol)?

MCP stands for Model Context Protocol. It’s a standardized way to build and manage the “context” that large language models (LLMs) use when generating answers.

MCP isn’t just another framework. It’s a design philosophy built on three principles:

- Standardized interfaces

- Clear separation of responsibilities

- System decoupling for flexibility and reuse

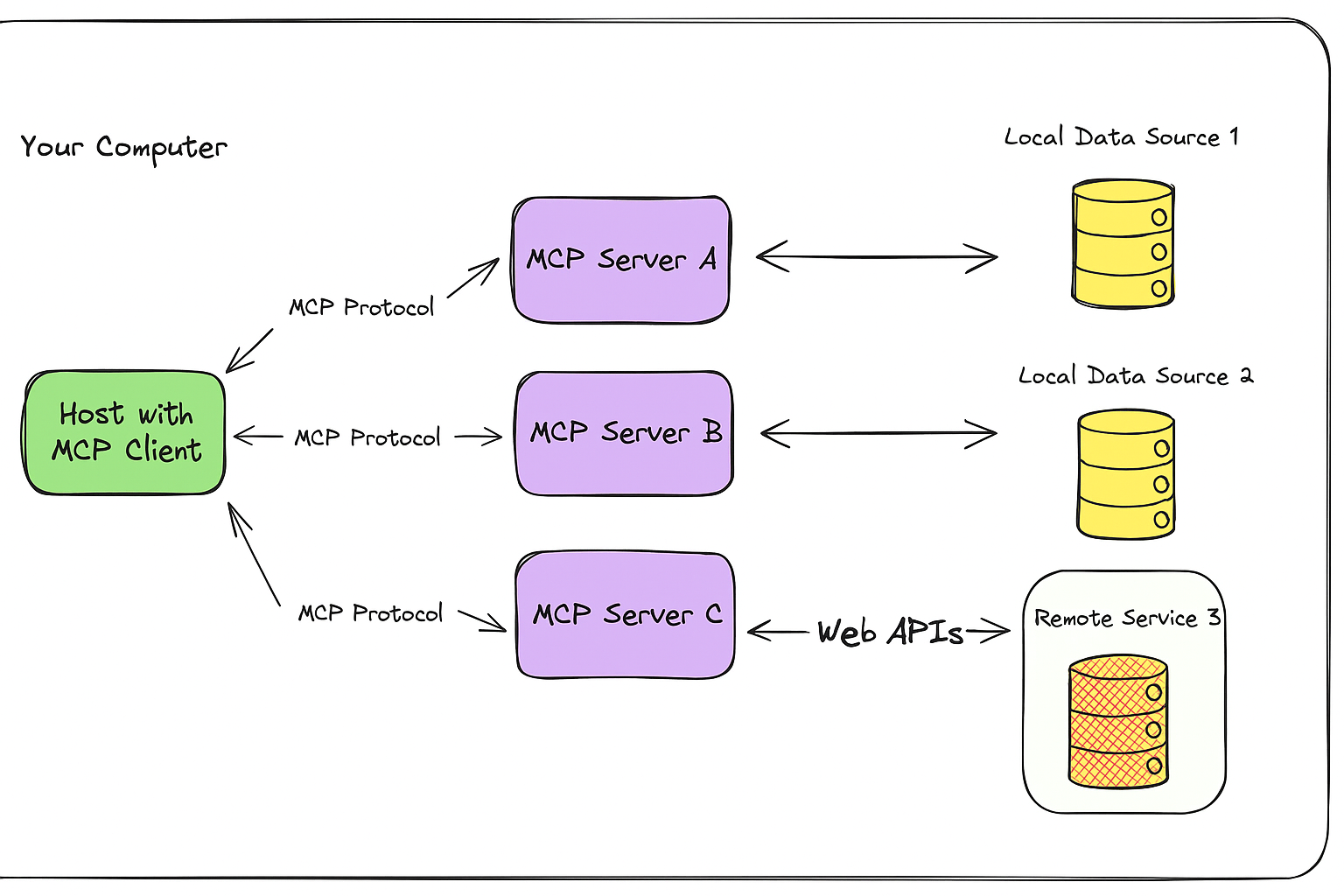

Instead of writing glue code for every service your AI touches, MCP introduces a modular architecture—splitting the system into three coordinated parts:

🧩 The Three Pillars of MCP

1. MCP Protocol

A shared language for communication—the specification that defines how requests and responses should look.

2. MCP Host (with Client)

The AI’s control room. This is where decisions are made, context is tracked, and tools are dispatched.

3. MCP Servers

Tool wrappers. Each one exposes a specific service (GitHub, Google Drive, etc.) in a machine-friendly, structured format.

Each part is self-contained, but together they form a system that’s cohesive, extendable, and developer-friendly.

🧠 MCP Host: The AI’s Orchestration Brain

The MCP Host is where everything converges. It lives inside your AI platform, agent system, or custom assistant, acting as the command center for external tool use.

It does more than just pass messages—it manages state, picks tools, dispatches requests, and integrates results.

Key responsibilities:

- Capability discovery: After startup, the Host uses its embedded MCP Client to connect to the servers it has been configured with, local or remote, and pulls the list of tools each one exposes.

- Context management: It tracks the conversation flow, previous calls, user intent—everything the AI needs to act coherently.

- Tool invocation: When the AI wants to take action, the Host formats and sends the request.

- Response aggregation: It handles replies from multiple servers, synthesizing them into a useful summary the AI can reason with.

🔍 Example

Suppose you ask the AI:

“List my GitHub pull requests and give me a summary.”

Here’s what happens under the hood: The Host locates the GitHub MCP Server, and maybe a File MCP Server. It calls both in sequence—fetching the PRs, then passing them to a summarization tool.
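The flow above can be sketched in a few lines of Python. This is a minimal illustration, not a real MCP implementation: `FakeServer`, `Host`, the `summarize` tool, and the canned pull-request titles are all hypothetical stand-ins, and no network or protocol traffic is involved.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

ToolFn = Callable[[dict[str, Any]], Any]


@dataclass
class FakeServer:
    """Hypothetical in-memory stand-in for an MCP server (no real transport)."""
    name: str
    tools: dict[str, ToolFn]

    def list_tools(self) -> list[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, arguments: dict[str, Any]) -> Any:
        return self.tools[tool](arguments)


@dataclass
class Host:
    servers: list[FakeServer]
    registry: dict[str, FakeServer] = field(default_factory=dict)
    history: list[tuple[str, Any]] = field(default_factory=list)

    def discover(self) -> list[str]:
        """Capability discovery: map every tool name to the server exposing it."""
        for server in self.servers:
            for tool in server.list_tools():
                self.registry[tool] = server
        return sorted(self.registry)

    def invoke(self, tool: str, arguments: dict[str, Any]) -> Any:
        """Tool invocation, plus a record of each call for context tracking."""
        result = self.registry[tool].call_tool(tool, arguments)
        self.history.append((tool, result))
        return result


# Canned data instead of real GitHub calls (assumption for illustration).
github = FakeServer("github", {
    "list_pull_requests": lambda args: ["Fix login bug", "Add dark mode"],
})
text = FakeServer("text", {
    "summarize": lambda args: f"{len(args['items'])} open PRs: " + "; ".join(args["items"]),
})

host = Host(servers=[github, text])
host.discover()
prs = host.invoke("list_pull_requests", {"owner": "me"})
summary = host.invoke("summarize", {"items": prs})
print(summary)
```

The registry is the only coupling between the Host and its servers in this sketch, which is why adding a third server would not require touching `invoke`.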

🔗 MCP Client: The Bridge Between AI and Tools

Sitting inside the Host, the MCP Client is the protocol executor. It handles formatting, dispatching, and receiving all MCP-related communications.

Think of it as the translator and scheduler: it turns the AI’s high-level intent into standardized protocol messages, then routes them to the right server.

Responsibilities:

- Capability fetching: On first contact with a server, the Client requests its list of capabilities—what it does, what it expects, what it returns.

- Request handling: It builds and sends structured JSON-RPC requests, in compliance with the MCP Protocol.

- Response parsing: It unpacks the results and hands them to the Host.

- Error resilience: Timeouts, malformed responses, permission issues—handled gracefully, with retries or fallback logic.
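As a rough sketch of those responsibilities, the snippet below builds a JSON-RPC 2.0 request, parses the reply, and retries on a timeout. The helper names (`build_request`, `parse_response`, `call_with_retry`) and the `flaky_send` transport are hypothetical; a real Client would use an SDK and an actual transport rather than these hand-rolled functions. The `tools/list` method name is the one MCP uses for capability listing.

```python
import itertools
import json
from typing import Any, Callable, Optional

_next_id = itertools.count(1)


def build_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request with a fresh numeric id."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def parse_response(raw: str) -> Any:
    """Return the result payload, or raise if the server reported an error."""
    message = json.loads(raw)
    if "error" in message:
        error = message["error"]
        raise RuntimeError(f"server error {error['code']}: {error['message']}")
    return message["result"]


def call_with_retry(send: Callable[[str], str], request: str, attempts: int = 3) -> Any:
    """Send a request, retrying on timeouts up to `attempts` times."""
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        try:
            return parse_response(send(request))
        except TimeoutError as exc:
            last_error = exc
    raise RuntimeError(f"gave up after {attempts} attempts") from last_error


# Hypothetical transport: times out once, then answers.
calls = {"count": 0}


def flaky_send(request: str) -> str:
    calls["count"] += 1
    if calls["count"] == 1:
        raise TimeoutError("simulated timeout")
    request_id = json.loads(request)["id"]
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "result": {"tools": [{"name": "list_pull_requests"}]}})


request = build_request("tools/list")
result = call_with_retry(flaky_send, request)
print(result["tools"][0]["name"])
```

Only timeouts are retried here; an error reported by the server is raised immediately, on the assumption that repeating the same request would fail the same way.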

Deployment: The Client is typically embedded as an SDK inside the Host. Languages like Python and TypeScript are already supported, making integration straightforward.

🛠️ MCP Server: Making Tools AI-Usable

The MCP Server is the interface layer between real-world tools and the AI. It wraps things like APIs, scripts, or internal services in a way the AI can understand and reliably invoke.

What it provides:

- Abstraction: AI doesn’t see raw API endpoints—it sees structured capabilities with defined inputs and outputs.

- Consistency: No need to reformat or special-case anything; everything speaks the same protocol.

- Encapsulation: Tool-specific logic stays hidden. The AI doesn’t care how you talk to GitHub—it just sends a request.
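To make the abstraction concrete, here is a minimal sketch of what a server might expose: a tool descriptor with a name, a description, and a JSON Schema for its inputs, plus a handler for listing and calling it. The tool name `list_pull_requests`, the `fetch_pull_requests` placeholder, and the `handle` function are assumptions for illustration; a real server would be built on an MCP SDK and would call the actual GitHub API.

```python
from typing import Any

# Hypothetical tool descriptor: what the model sees instead of raw endpoints.
LIST_PRS_TOOL = {
    "name": "list_pull_requests",
    "description": "List open pull requests for a repository.",
    "inputSchema": {
        "type": "object",
        "properties": {"repo": {"type": "string"}},
        "required": ["repo"],
    },
}


def fetch_pull_requests(repo: str) -> list[str]:
    """Placeholder for the real GitHub API call, which stays hidden from the model."""
    return [f"{repo}#1: Fix login bug", f"{repo}#2: Add dark mode"]


def handle(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Answer the two request types this sketch supports."""
    if method == "tools/list":
        return {"tools": [LIST_PRS_TOOL]}
    if method == "tools/call" and params.get("name") == "list_pull_requests":
        titles = fetch_pull_requests(params["arguments"]["repo"])
        return {"content": [{"type": "text", "text": "\n".join(titles)}], "isError": False}
    raise ValueError(f"unsupported request: {method}")


listing = handle("tools/list", {})
reply = handle("tools/call", {"name": "list_pull_requests", "arguments": {"repo": "acme/app"}})
print(reply["content"][0]["text"])
```

Swapping `fetch_pull_requests` for a different backend would leave the descriptor and the reply shape unchanged, which is the encapsulation point made above.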

🧪 Example Servers

| Server Type | What It Does |
|---|---|
| GitHub Server | Handles PR listings, translates them to GitHub API calls |
| File Server | Takes “write this text” instructions and saves them locally |
| YouTube Server | Accepts links, returns video transcripts |

🧬 MCP Protocol: A Shared Language for AI ↔ Tools

At the heart of it all is the MCP Protocol—the rulebook that makes this entire system interoperable.

It defines:

- Request formats

- Response structures

- Error reporting conventions

- Capability metadata descriptions

Why this matters:

- No need to rewrite integration logic per tool

- Local scripts and remote APIs behave the same

- AI can automatically discover how to use new tools

Once a server implements the protocol, any compliant client can talk to it—no extra wiring required.
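Since MCP messages are JSON-RPC 2.0, the request, response, and error shapes can be illustrated with a small dispatcher. This is a sketch under stated assumptions: `dispatch`, `error`, and the `echo` method are hypothetical names invented for the example, while the numeric error codes are the standard JSON-RPC 2.0 ones.

```python
import json
from typing import Any, Callable

# Standard JSON-RPC 2.0 error codes.
PARSE_ERROR = -32700
INVALID_REQUEST = -32600
METHOD_NOT_FOUND = -32601
INVALID_PARAMS = -32602

Handler = Callable[[dict[str, Any]], Any]


def error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    """Build an error response envelope."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def dispatch(raw: str, handlers: dict[str, Handler]) -> dict[str, Any]:
    """Turn one raw request into a success or error response envelope."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return error(None, PARSE_ERROR, "Parse error")
    if not isinstance(request, dict):
        return error(None, INVALID_REQUEST, "Invalid Request")
    request_id = request.get("id")
    handler = handlers.get(request.get("method"))
    if handler is None:
        return error(request_id, METHOD_NOT_FOUND, "Method not found")
    try:
        result = handler(request.get("params", {}))
    except (KeyError, TypeError) as exc:
        return error(request_id, INVALID_PARAMS, f"Invalid params: {exc}")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


# Hypothetical method used only to exercise the dispatcher.
handlers = {"echo": lambda params: {"text": params["text"]}}

ok = dispatch('{"jsonrpc": "2.0", "id": 1, "method": "echo", "params": {"text": "hi"}}', handlers)
missing = dispatch('{"jsonrpc": "2.0", "id": 2, "method": "nope"}', handlers)
bad = dispatch('{"jsonrpc": "2.0", "id": 3, "method": "echo", "params": {}}', handlers)
garbled = dispatch("{not json", handlers)
print(ok["result"], missing["error"]["code"], bad["error"]["code"], garbled["error"]["code"])
```

Because every failure comes back in the same envelope, a client can handle a local script and a remote API with the same error path.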

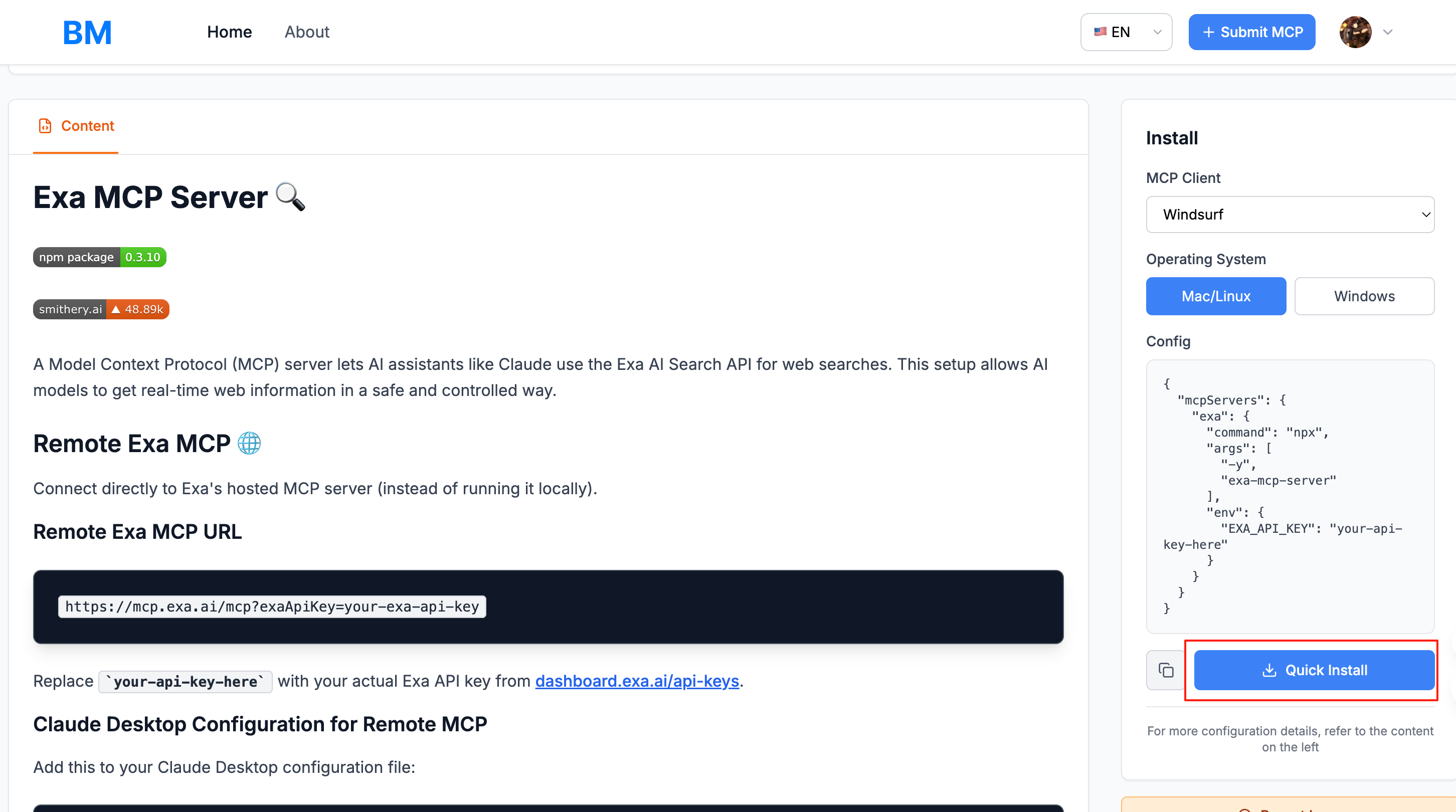

How Do You Actually Use an MCP Server? (With Cursor as an Example)

Let’s say you’re working inside Cursor, and you want your AI assistant to help you search for high-quality, developer-relevant results—not just scrape the web like a generic search engine.

You type a prompt like:

“Search for practical examples of how to debounce a function in JavaScript.”

Simple request, right? But what actually happens behind the scenes is an interaction with a specialized tool: the Exa MCP Server—a powerful AI-enhanced search interface.

Here’s what that workflow looks like:

1. You open the MCP Server marketplace: https://bestofthemcp.com

2. You browse the catalog and select the “Exa MCP Server”, purpose-built for dev-focused semantic search.

3. You copy its configuration and paste it into Cursor’s MCP configuration file (.cursor/mcp.json in the project, or ~/.cursor/mcp.json for all projects).

4. After setup, Cursor automatically starts the Exa service in the background.

5. Now, you type in: “Find recent TypeScript guides that include code examples for setting up tRPC.”

6. Cursor recognizes this as a search task, and instead of doing a basic web scrape or keyword query, it delegates the request to the Exa MCP Server.

7. The Exa Server processes the query using semantic ranking, code-oriented context, and real-time indexing, then returns a list of highly relevant sources.

8. Cursor formats the result, possibly summarizing or linking top hits inline.

You see:

✅ Found 5 high-quality examples. Want to open the first one?

And just like that, you’re working with search results that actually respect your developer intent—not just noisy search engine links.
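For reference, the configuration pasted during setup usually has a simple shape: one entry per server under an `mcpServers` key, each with a command, its arguments, and any environment variables. The Python sketch below builds such an entry and prints it as JSON. The helper `cursor_server_entry` is hypothetical, and the package name `exa-mcp-server` and the variable name `EXA_API_KEY` are assumptions; copy the real values from the server's own listing.

```python
import json


def cursor_server_entry(name: str, command: str, args: list[str], env: dict[str, str]) -> dict:
    """Build one server entry in the shape used under the "mcpServers" key."""
    return {"mcpServers": {name: {"command": command, "args": args, "env": env}}}


config = cursor_server_entry(
    name="exa",
    command="npx",
    args=["-y", "exa-mcp-server"],          # assumed package name
    env={"EXA_API_KEY": "<your-api-key>"},  # assumed variable name, placeholder value
)
print(json.dumps(config, indent=2))
```

The printed JSON is what would go into the configuration file; keeping the API key in `env` rather than in `args` avoids exposing it on the command line.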